August 28, 2025

iFLYTEK Debuts at World Robot Conference 2025: Intelligent Interaction Technology Leads New Wave of Embodied Intelligence

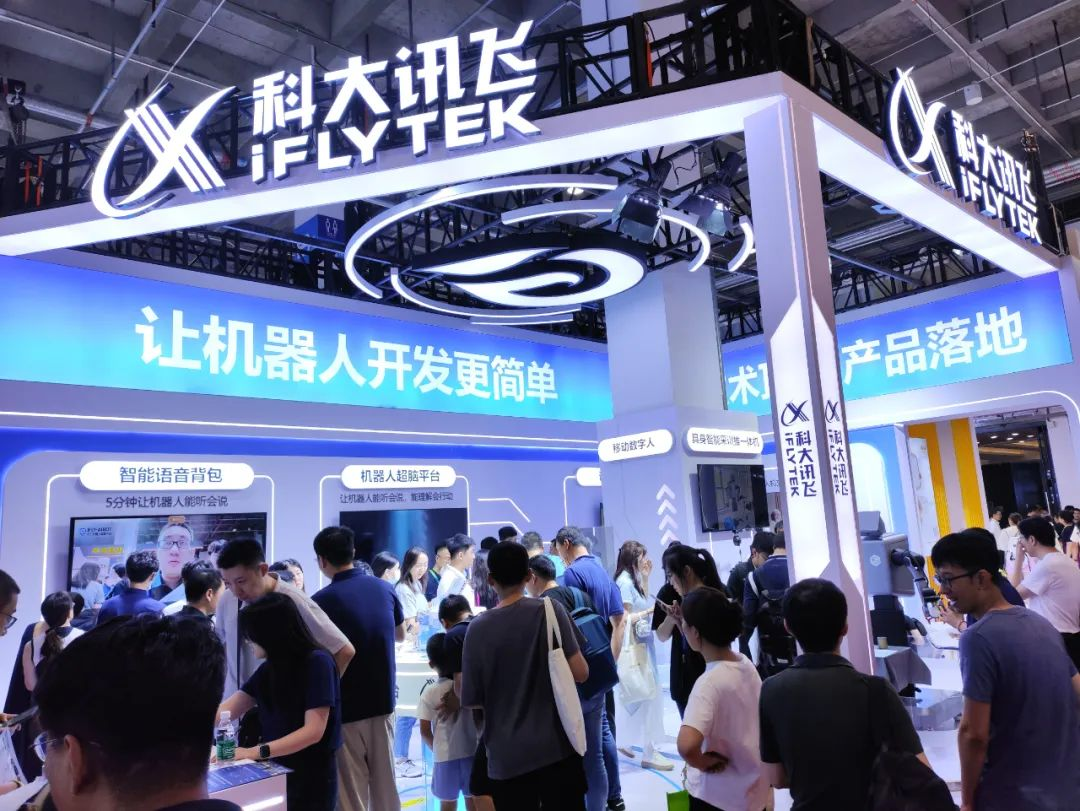

On August 8, the World Robot Conference 2025 kicked off in Beijing under the theme “Making Robots Smarter, Making Embodied Agents More Intelligent.” This year’s conference brought together more than 200 leading robotics companies and over 400 industry experts from around the globe to chart a course for innovation in the robotics sector. iFLYTEK showcased a series of cutting-edge products, including its Intelligent Voice Backpack, Multilingual AI Transparent Screen, and Mobile Digital Human, demonstrating the latest breakthroughs and practical applications of intelligent interaction technologies in robotics.

Intelligent Voice Backpack: Enabling Robots to “Understand and Act”

At iFLYTEK’s exhibition area, the combination of the Intelligent Voice Backpack and the G1 robot became a star attraction. In live demonstrations, the G1—equipped with the backpack—not only performed complex movements such as Cyber Tai Chi with precision but also engaged in emotionally rich social interactions.

G1 Robot Equipped with the Intelligent Voice Backpack

The Intelligent Voice Backpack is an all-in-one solution designed for rapid integration of voice interaction into robots. It consists of a perception module and a fully functional voice backpack, offering plug-and-play convenience with no structural modifications required. This solution transforms the traditional robot development model: developers can instantly equip robots with advanced voice capabilities without changing any hardware, dramatically shortening the R&D cycle. Its core strength lies in the integration of multimodal noise reduction and deep semantic understanding, allowing it to accurately capture sound even in noisy environments and thoroughly interpret user intent. At the same time, it seamlessly links voice commands with motion control and operational logic, ensuring smooth execution from instruction to action.

Whether on the factory floor or in busy service settings, robots equipped with the Intelligent Voice Backpack can achieve highly accurate voice interaction. This significantly expands the potential applications of robotics and accelerates the adoption of intelligent interaction technologies in real-world scenarios.

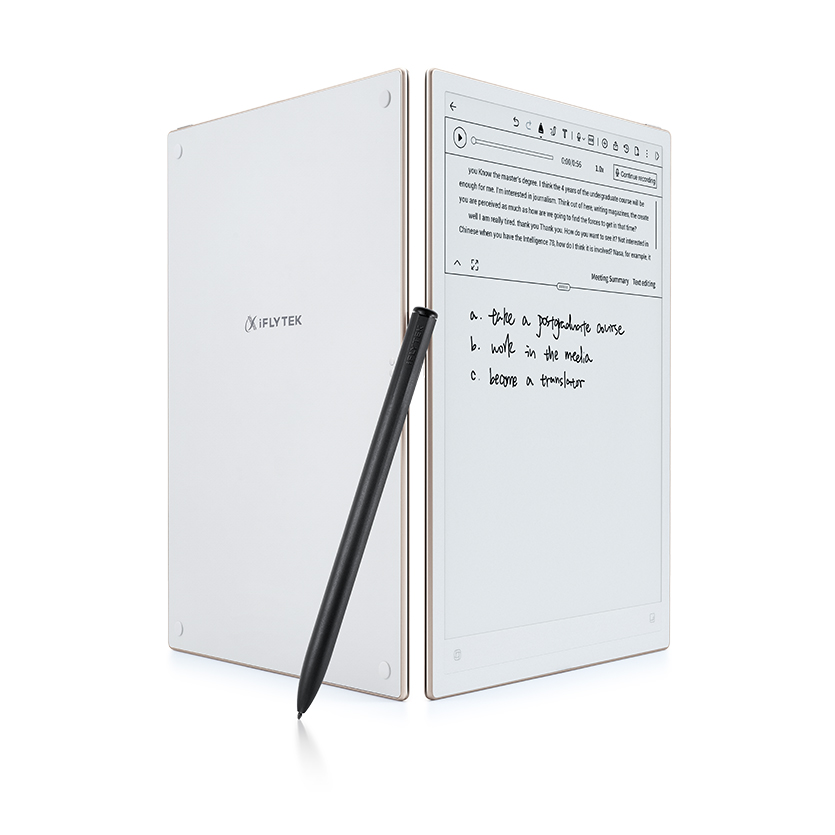

Multilingual AI Transparent Screen: An “Intelligent Bridge” Breaking Down Language Barriers

On the other side of the booth, the double-sided Multilingual AI Transparent Screen attracted significant attention. When a Japanese visitor spoke to staff in Japanese, the screen instantly provided real-time two-way translation, prompting exclamations of amazement: “It’s incredible! I never imagined a transparent screen could pull off real-time conversation translation.”

This capability is made possible by integrating multilingual speech recognition, real-time translation, the iFLYTEK SPARK Large Model, and multimodal noise reduction technology. The system supports two-way real-time translation in 15 languages, among them Chinese, English, French, German, Russian, Japanese, Korean, Thai, Vietnamese, and Arabic. It also offers a digital human customer service interaction mode.

The product has already been deployed in a variety of settings such as airports, subways, hotels, shopping malls, scenic spots, government service centers, and financial institutions. By greatly enhancing service efficiency in multilingual environments, it serves as a true “intelligent bridge” for cross-cultural communication.

Mobile Digital Human: Redefining Intelligent Guided Interaction

“Which booth do you think is the most interesting today?” At the exhibition, crowds quickly gathered around the interactive zone of the Mobile Digital Human “Xiaoyu.” Visitors lined up eagerly to converse with this “high-EQ” digital assistant. Tilting her head thoughtfully for a moment before breaking into a smile, Xiaoyu responded: “Every booth has something special to offer—just like every person has their own unique spark.” Her lifelike expressions and natural tone often drew laughter and engagement from the audience.

Mobile Digital Human “Xiaoyu”

This “mobile intelligent interactive entity” features a highly realistic humanoid avatar displayed on a 55-inch OLED transparent screen mounted on a movable platform. Equipped with 360° acoustic positioning and multimodal interaction technology, the system accurately detects the viewer’s position and identifies voice commands. Integrated with the SPARK Large Model and customizable knowledge bases, it delivers context-aware understanding and intelligent responses. Designed for end-to-end broadcasting, control, and deployment, the solution has already been adopted in museums, service halls, and other public spaces. By moving freely and offering immersive interaction, it elevates the experience of visitor guidance and brand communication.

Full-Stack Interaction Modules: Building the “Perceptual Neural Network” for Robots

Dedicated to advancing intelligent robot interaction, iFLYTEK also showcased a comprehensive suite of interaction modules, including a multimodal development and evaluation kit for robotics, the Robot Super Brain Board, and an offline large-model interaction module. Together, these components form a “perceptual neural network” for robots, enabling them to understand and respond to user needs more accurately and efficiently.

“Most leading intelligent robot manufacturers in China have already adopted the integrated hardware-software solutions offered by the Robot Super Brain Platform,” stated Liu Kewei, General Manager of iFLYTEK’s Robot Super Brain Platform. “Moving forward, the platform will continue to advance into deeper realms of embodied intelligence—enabling robots to progress from simply understanding commands to active thinking and human-like comprehension.”

Embodied Intelligence All-in-One Training Machine: Unlocking a New Paradigm for Flexible Manufacturing

In the field of embodied intelligence training, the all-in-one data collection, training, and inference machine exhibited by LindenBot—an ecosystem company of iFLYTEK—has drawn significant attention. The device offers notable advantages such as user-friendly operation, broad application scenarios, and seamless integration of data collection, training, and inference. It meets the training and deployment requirements of most robotic models on the market, positioning it as a versatile industry-grade solution.

Ji Chao, CEO of LindenBot and Chief Scientist of iFLYTEK Robotics, highlighted a key challenge in embodied intelligence: the trade-off between generalization capability and initial efficiency. The solution, he noted, lies in equipping robots with end-to-end abilities—from task comprehension and action planning to execution—through multimodal large models, thereby overcoming bottlenecks in flexible manufacturing. LindenBot’s strategy builds on iFLYTEK’s multimodal pre-training foundation using a layered architecture and a “one brain, multiple cerebellums” design. This allows for rapid adaptation across scenarios while balancing generalization with high efficiency. “At this stage, the most viable approach may indeed be this combination of a central cognitive system with specialized executive modules,” Ji emphasized.

From core speech interaction technologies to multi-scenario applications, iFLYTEK is guided by the vision of “making robot development simpler.” Through continuous technological iteration and scenario-driven innovation, iFLYTEK is helping accelerate the robotics industry toward greater intelligence and real-world applicability—pioneering a new wave of embodied intelligence.
